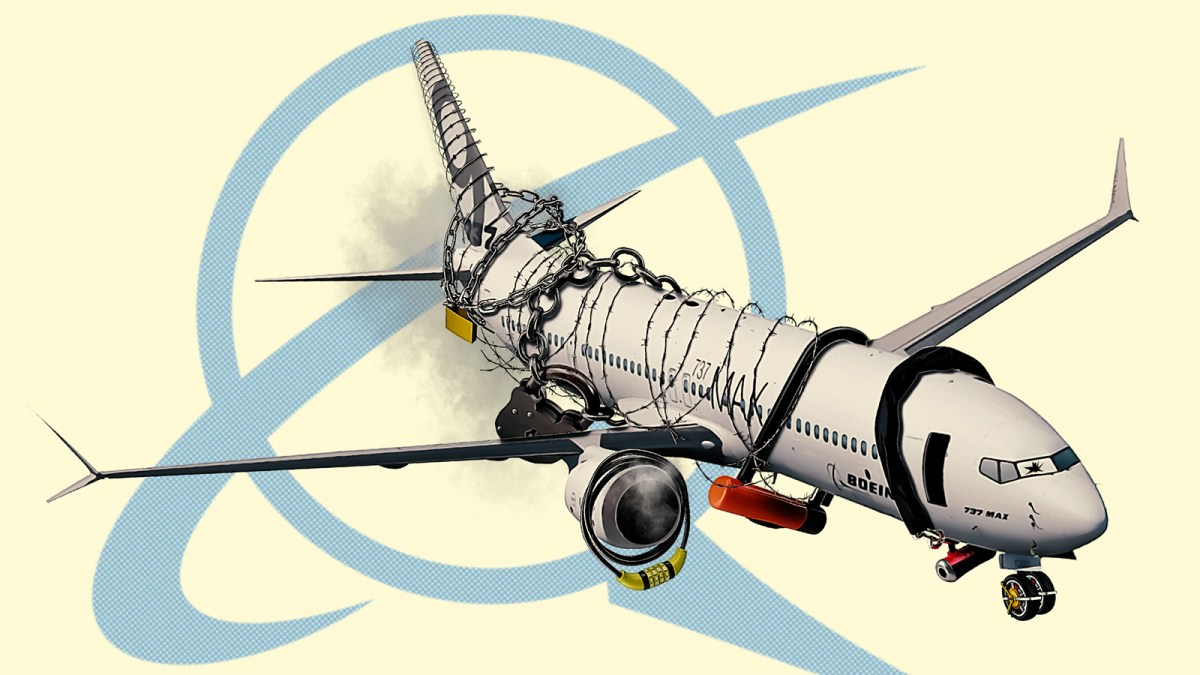

MAE 4300: Boeing 737 MAX Case Study

Synopsis of Crashes

The Boeing 737 MAX crashes in 2018 (Lion Air Flight 610) and 2019 (Ethiopian Airlines Flight 302) were largely linked to a new flight-control feature called the Maneuvering Characteristics Augmentation System (MCAS). MCAS was designed to automatically push the aircraft's nose down when it sensed a high angle of attack, helping to prevent a stall. However, it relied on data from just one sensor and could activate erroneously. In both crashes, faulty sensor data repeatedly triggered MCAS, driving the nose down despite the pilots' efforts to counteract it. Pilots were not adequately trained on MCAS, and the system was not clearly documented in their manuals; these gaps contributed to the loss of control and the deaths of all passengers and crew on both flights.

TLDR: Conclusions are at the bottom.

The Boeing 737 MAX crisis centers on two fatal crashes (Lion Air Flight 610 in October 2018 and Ethiopian Airlines Flight 302 in March 2019), which together killed 346 people. Both tragedies were linked to a flight-control feature called the Maneuvering Characteristics Augmentation System (MCAS), a software element designed to automatically push the aircraft's nose down when sensors indicated an excessively high angle of attack. Because MCAS originally relied on a single sensor input, erroneous readings repeatedly triggered the system, forcing nose-down commands that pilots struggled to counteract and ultimately leading to catastrophic loss of control. Compounding the technical issue, MCAS was not clearly documented for pilots, who lacked adequate training on its existence and behavior; this contributed to their inability to diagnose and respond to its unexpected activations.
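The single-sensor failure mode can be illustrated with a deliberately simplified Python sketch. It is not Boeing's actual MCAS code: the function name `single_sensor_mcas`, the activation threshold, the trim increment, and the pilot's counter-trim are all hypothetical values chosen for illustration, and the real system also depended on conditions such as flap position and autopilot state. The point is only that, with no cross-check, a stuck-high angle-of-attack reading fires again on every cycle, so nose-down trim accumulates even while the pilot fights it.

```python
# Hypothetical values chosen for illustration; not the real MCAS parameters.
AOA_THRESHOLD_DEG = 15.0  # activation threshold, degrees
NOSE_DOWN_STEP = 2.5      # nose-down trim per activation, arbitrary units


def single_sensor_mcas(aoa_readings, pilot_trim_back):
    """Simulate cumulative trim when only ONE angle-of-attack sensor is trusted.

    aoa_readings: readings (degrees) from a single sensor, one per cycle.
    pilot_trim_back: nose-up trim the pilot applies after each activation.
    Returns the net trim after each cycle (negative = nose down).
    """
    net_trim = 0.0
    history = []
    for aoa in aoa_readings:
        if aoa > AOA_THRESHOLD_DEG:
            # No cross-check: a faulty reading is treated as real and fires a command.
            net_trim -= NOSE_DOWN_STEP
            # The pilot partly counters, but the system fires again next cycle.
            net_trim += pilot_trim_back
        history.append(round(net_trim, 2))
    return history


# Illustrative sensor stuck high while the aircraft is actually in normal flight.
faulty_sensor = [25.0] * 5
print(single_sensor_mcas(faulty_sensor, pilot_trim_back=1.5))
# -> [-1.0, -2.0, -3.0, -4.0, -5.0]
```

Because each activation outweighs the pilot's counter-trim in this toy model, the net trim keeps drifting nose-down; with a healthy sensor reading below the threshold, nothing fires.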

In studying the crisis, a structured approach was taken to pinpoint the most critical ethical and technical issues. First, the case study identified ten potential ethical questions that captured the core decision points and failures in the design, certification, and operational use of the 737 MAX. These included whether Boeing should have been allowed to waive certain sensor test results, whether profit was prioritized over safety, whether MCAS details were adequately disclosed in pilot manuals, and whether regulators relied too heavily on Boeing's own oversight processes. Systematically narrowing these questions identified three areas as most significant: the reliance on faulty sensor data without adequate redundancy, the omission of MCAS from pilot training and manuals, and regulatory outsourcing that may have reduced independent scrutiny.

Next came a detailed ethical analysis using standards such as the ASME Code of Ethics, whose first fundamental canon requires engineers to hold paramount the safety, health, and welfare of the public. For example, the case study argued that allowing MCAS to rely on a single angle-of-attack sensor known to be unreliable violated this core responsibility to ensure safety. Similarly, withholding full information about MCAS from pilots limited their ability to make informed decisions in critical situations, conflicting with the obligation to fully disclose risks and operational details in training materials. The outsourcing of inspection and certification to Boeing personnel also raised concerns about conflicts of interest that may have led to insufficient oversight.

Finally, the study concluded with five key lessons that should guide future practice:

- Regulators like the FAA should not outsource essential safety oversight to manufacturers;

- MCAS and other critical systems must be fully documented and integrated into pilot manuals and training;

- After the first crash, 737 MAX aircraft should have been grounded pending investigation of failure modes;

- Known sensor failures should have prompted corrective technical action before further flights;

- Pilot feedback indicating problems should have been taken seriously rather than dismissed in favor of production schedules.

Overall, this case underscores how technical design decisions, regulatory processes, and ethical responsibilities intersect in complex engineered products. While MCAS was intended to address aerodynamic changes in the 737 MAX, its flawed implementation and the failures to communicate how it worked contributed to both tragedies. Subsequent actions, such as updated MCAS logic requiring dual-sensor agreement and preventing repeated nose-down commands, along with changes to training and documentation, aim to prevent similar failures in the future.
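The two logic changes mentioned above (dual-sensor agreement and no repeated nose-down commands) can be sketched in Python as follows. This is a hypothetical illustration, not the certified software: the function name `revised_mcas_commands`, the activation threshold, and the disagreement limit are assumed values, and the actual update includes further protections, such as limiting how much stabilizer trim MCAS can command. The sketch inhibits the function whenever the two sensors disagree and allows at most one activation per high-angle-of-attack event, re-arming only after the reading drops back to or below the threshold.

```python
# Hypothetical values chosen for illustration; not the certified MCAS parameters.
AOA_THRESHOLD_DEG = 15.0  # activation threshold, degrees
MAX_DISAGREE_DEG = 5.0    # allowed difference between left and right sensors, degrees


def revised_mcas_commands(left_aoa, right_aoa):
    """Return one bool per cycle: True if a nose-down command is issued.

    Illustrates two safeguards:
    1) if the two sensors disagree by more than MAX_DISAGREE_DEG, do nothing;
    2) fire at most once per high-AoA event, re-arming only after AoA
       returns to or below AOA_THRESHOLD_DEG.
    """
    commands = []
    armed = True
    for left, right in zip(left_aoa, right_aoa):
        if abs(left - right) > MAX_DISAGREE_DEG:
            # Sensors disagree: inhibit rather than trust either reading.
            commands.append(False)
            continue
        aoa = (left + right) / 2  # sensors agree, so use their average
        if aoa > AOA_THRESHOLD_DEG:
            commands.append(armed)  # command only if not already fired this event
            armed = False
        else:
            armed = True  # event over; allow a future activation
            commands.append(False)
    return commands


# One faulty sensor (left stuck high) no longer triggers anything.
print(revised_mcas_commands([25.0] * 3, [5.0] * 3))
# -> [False, False, False]

# Genuine high AoA fires once, re-arms after AoA drops, then can fire again.
print(revised_mcas_commands([18.0, 18.0, 18.0, 4.0, 18.0],
                            [17.5, 18.0, 18.5, 4.5, 18.0]))
# -> [True, False, False, False, True]
```

In the first call, a single faulty sensor no longer produces any command; in the second, genuine high readings trigger one command, and another is allowed only after the angle of attack falls back below the threshold.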

TLDR: I concluded that five things should have been done differently regarding the crashes:

- The FAA should not have been allowed to delegate safety oversight to Boeing. It should have done the regulating itself.

- MCAS should have been fully described in the pilot manuals to uphold public safety and welfare.

- Boeing or the FAA should have formally investigated and grounded the 737 MAX fleet after the first crash.

- Boeing should have properly addressed the faulty angle-of-attack readings and replaced any other faulty sensors.

- Boeing should have listened to pilot feedback and taken it into account in its design choices.
